AI in policymaking: When and how governments should use algorithmic insight

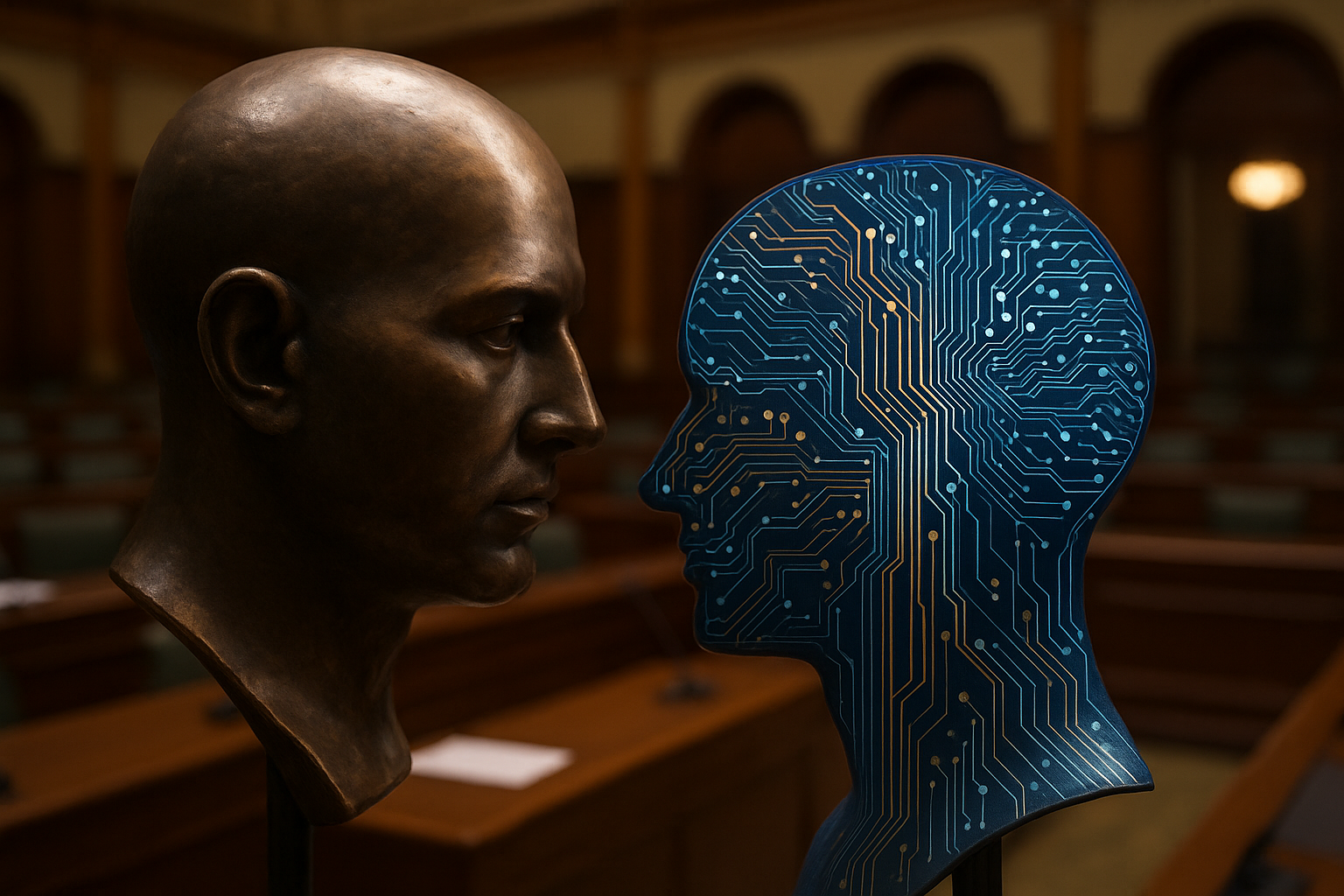

A new study explores how artificial intelligence can be used to enhance democratic governance by predicting public preferences. Published in AI & Society, the paper assesses both the potential and risks of integrating predictive AI tools into policymaking processes, particularly in contexts where traditional democratic mechanisms face limitations due to cost, complexity, or inefficiency.

The research, titled "AI Preference Prediction and Policy Making", draws conceptual distinctions between the ways artificial intelligence could be deployed to model and reflect the preferences of individual citizens or entire populations. Rather than proposing AI as a replacement for democratic institutions, the study presents frameworks for using machine learning models as epistemic tools that support representative or participatory processes in government.

How can AI predict public preferences without undermining democracy?

The study primarily assesses whether AI can be ethically and reliably used to support democratic decision-making by predicting citizen preferences. The authors argue that elected representatives in many democratic systems fail to fully reflect the preferences of their constituents. While tools such as referenda, citizen assemblies, or consultation processes offer more direct engagement, these methods are often too resource-intensive to apply on a wide scale.

AI systems, particularly those trained on large datasets of public opinion, behavior, and social media activity, offer a scalable method for approximating what different population segments might want in policy terms. These systems can be deployed to support governance in various ways, from screening policies for potential public objections to providing recommendations that align more closely with population-level values. In this role, AI functions as an epistemic tool, one that informs but does not decide.

Importantly, the authors differentiate between using AI to predict preferences and using it to infer values. The former involves forecasting what people might choose in hypothetical policy scenarios, whereas the latter rests on stronger normative assumptions about which outcomes should be prioritized. This distinction is critical, as misinterpreting preference data could lead to misguided conclusions about what is ethically desirable.

Moreover, the researchers acknowledge that AI models, if deployed carelessly, could drift into procedural roles that blur the line between advice and decision-making. This raises concerns about legitimacy, transparency, and the erosion of human oversight in public governance. The study does not endorse AI as a decision-maker; instead, it advocates carefully bounded use in advisory capacities that respect democratic integrity.

What are the ethical risks in deploying AI in policy environments?

The study outlines a range of ethical and practical risks associated with AI-based preference prediction in policymaking. One central concern is opacity. Many AI models, especially those based on deep learning, are difficult to interpret or audit. This lack of transparency makes it challenging to trace how predictions are formed or to verify whether they fairly represent diverse viewpoints across a population.

Bias is another critical issue. If training data reflects historical injustices or skewed representations of social groups, the resulting predictions may reinforce existing inequalities. Policymakers who rely on such data may inadvertently marginalize vulnerable communities or favor the preferences of majority groups over others.

Additionally, the use of AI for policy justification, where governments cite AI predictions as validation for certain decisions, presents a potential pathway for manipulation. Instead of enabling more responsive governance, AI might be used to retroactively legitimize controversial or unpopular policies under the guise of algorithmic neutrality.

The authors also highlight the risk of disengagement. As AI systems become more capable of anticipating public opinion, there may be reduced incentive for civic participation. Citizens might be less motivated to vote, deliberate, or voice opinions if they believe AI tools can already model their preferences effectively. This could lead to a technocratic drift in governance, where human deliberation is devalued in favor of automated consensus models.

Despite these risks, the study emphasizes that the ethical pitfalls are not insurmountable. Many can be mitigated through value-sensitive design principles, rigorous oversight, and public transparency. Crucially, the authors suggest that AI should not be seen as a substitute for democratic engagement but as a supplement that requires careful integration into existing institutional frameworks.

When should AI be used in policymaking and under what conditions?

The study provides guidelines for when and how AI can be responsibly used in the context of democratic policymaking. The key lies in clarifying the function AI is intended to serve: advisory rather than authoritative, reflective rather than prescriptive.

AI tools are most appropriate in the early stages of policy development, where they can help identify emerging trends in public opinion, test the acceptability of proposed initiatives, or highlight overlooked stakeholder concerns. For instance, predictive models could be used to simulate public responses to tax changes, health interventions, or climate regulations before these policies are enacted. This would allow decision-makers to fine-tune proposals so that they align more closely with the electorate's priorities.

Another appropriate use case is retrospective analysis, where AI systems help governments understand how past decisions aligned with or diverged from public sentiment. This could improve accountability and serve as feedback for future governance.

However, the authors insist on several preconditions for ethical deployment. These include transparency about the model's data sources and limitations, public input into how predictions are interpreted, and institutional safeguards to prevent overreliance on automated outputs. Ethical frameworks must also address questions of consent and privacy, particularly when individual-level prediction is involved.

The study calls for a cautious but optimistic approach that acknowledges AI’s power while remaining firmly grounded in the principles of democratic legitimacy and social justice.

First published in: Devdiscourse