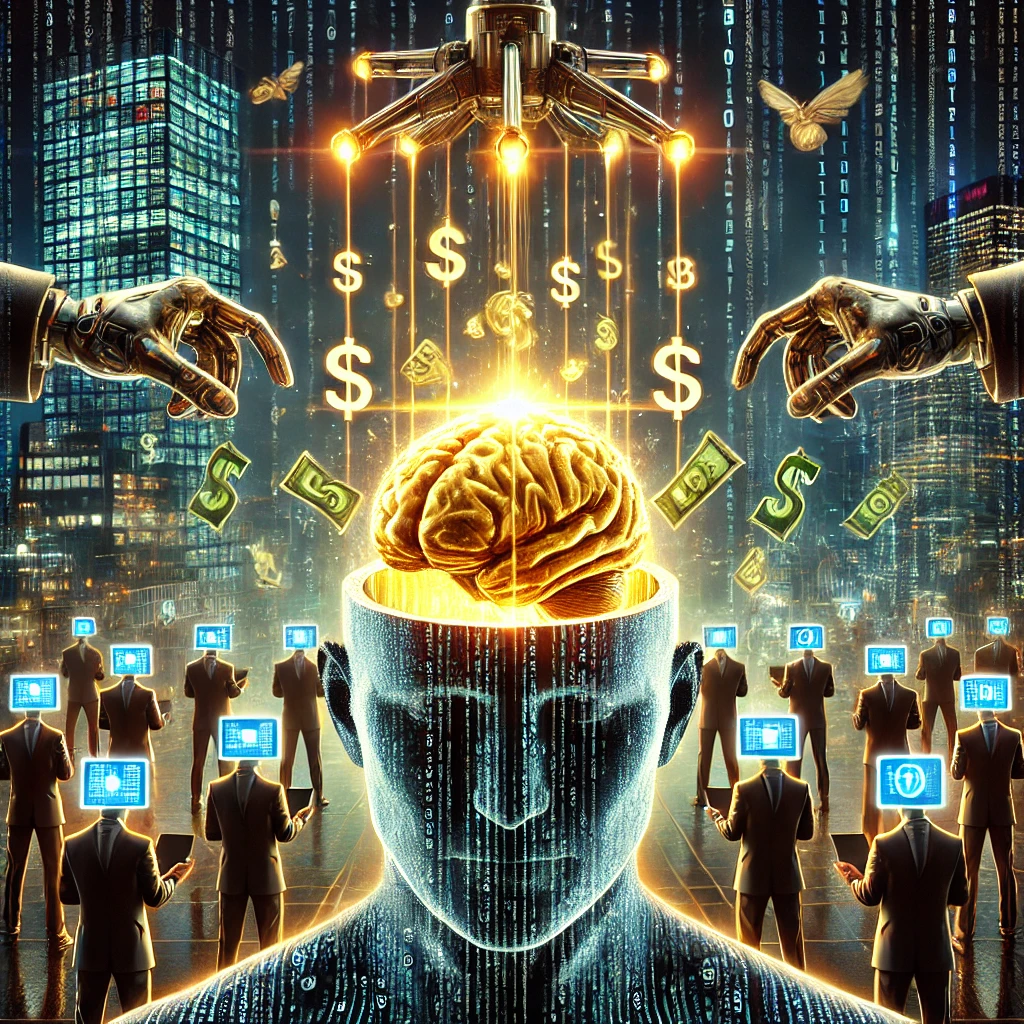

Attention is the new commodity: How AI and capitalism exploit the human mind

A new academic study argues that artificial intelligence (AI)-powered digital platforms are not merely reshaping user habits but actively restructuring the cognitive and political fabric of human life through their manipulation of attention. The research, titled “Attention Is All They Need: Cognitive Science and the (Techno)Political Economy of Attention in Humans and Machines” and published in AI & Society, critically investigates how digital technologies exploit attention mechanisms in both humans and machines, turning cognition itself into a battleground for control and profit.

The study blends cognitive science with political economy to scrutinize how platform capitalism, fueled by predictive algorithms, behavioral analytics, and engagement-maximization tools, reconfigures human attention as a commodified, extractable resource. It warns that this new attention regime has deep implications for autonomy, democratic discourse, and the role of AI in shaping perception and behavior.

How is the attention economy structured by AI and digital capitalism?

The authors argue that the current digital attention economy is an outcome of intentional design rooted in the business models of major technology platforms. Social media apps, content platforms, and e-commerce engines have progressively moved toward AI-driven infrastructures that harvest, predict, and manipulate user behavior in real time. These systems rely on vast data collection pipelines and deep learning architectures that learn from past user actions to optimize future interactions.

Rather than viewing attention as an internal, isolated cognitive function, the study presents it as an emergent property of the interaction between neural processes, sociotechnical environments, and institutional architectures. Attention becomes the currency of platform capitalism, where human engagement is tracked, packaged, and monetized through algorithmic systems that reinforce habitual behaviors.

Key to this process is the shift from content relevance to engagement maximization. Recommendation systems, predictive scroll features, and push notifications are all designed to hijack the user’s attentional bandwidth, extending time-on-platform while extracting behavioral signals that feed back into AI optimization loops. This dynamic forms a closed circuit where the user becomes both the product and the target of machine learning strategies aimed at maximizing retention and click-through rates.
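The closed circuit described above can be illustrated with a toy simulation. The sketch below is not taken from the study; it assumes a simple epsilon-greedy ranking loop, and the item names, click probabilities, and the `simulate_feed` function are all hypothetical. It shows only the general pattern: the system shows an item, records whether the user clicks, and uses that signal to decide what to show next.

```python
import random

def simulate_feed(click_prob, rounds=5000, explore=0.1, seed=0):
    """Toy engagement loop: show an item, observe a click, update the estimate.

    click_prob maps each (hypothetical) item to the chance a user clicks it.
    Returns how many times each item was shown.
    """
    rng = random.Random(seed)
    shows = {item: 0 for item in click_prob}
    clicks = {item: 0 for item in click_prob}

    def estimated_rate(item):
        # Items never shown get an optimistic estimate so each is tried once.
        return clicks[item] / shows[item] if shows[item] else 1.0

    for _ in range(rounds):
        if rng.random() < explore:
            # Occasionally test a random item to gather fresh behavioral signal.
            item = rng.choice(list(click_prob))
        else:
            # Otherwise show whatever currently looks most engaging.
            item = max(click_prob, key=estimated_rate)
        shows[item] += 1
        if rng.random() < click_prob[item]:
            clicks[item] += 1  # the click feeds back into the next decision
    return shows

# Assumed click probabilities, invented purely for illustration.
shows = simulate_feed({"measured_explainer": 0.05, "outrage_post": 0.30})
print(shows)
```

Nothing in this loop asks whether an item is relevant or accurate. Exposure drifts toward whatever gets clicked most, which is the shift from relevance to engagement that the authors describe.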

What cognitive models explain attention in humans and machines?

To unpack how attention operates in this environment, the study delves into competing theories from cognitive science. It contrasts traditional models, where attention is seen as a limited resource to be selectively allocated, with contemporary views that see attention as a process of active prediction and environmental modulation. These newer models align more closely with how AI systems manage attention: through reinforcement learning, transformer-based token weighting, and goal-directed behavior shaping.

In humans, attention emerges from an ongoing negotiation between sensory inputs, bodily states, and contextual goals. Similarly, in AI systems, particularly in architectures like transformers, attention mechanisms weigh the relevance of data elements relative to task objectives. However, unlike human attention, AI attention does not involve consciousness, emotional valence, or social intentionality.
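For readers unfamiliar with the machine side of this comparison, the following is a minimal sketch of scaled dot-product attention, the weighting operation used in transformer architectures. It is written in plain Python for a single query, and the toy vectors are assumptions chosen for illustration, not data from the study.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Relevance of each element: dot product of the query with its key.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Output: average of the value vectors, weighted by relevance.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy example (hypothetical data): three tokens with two-dimensional vectors.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention(query, keys, values)
print(weights, output)
```

The weights here are simply normalized similarity scores. The sketch makes the article's contrast concrete: this form of attention is arithmetic over vectors, with no consciousness, emotional valence, or social intent involved.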

The convergence of these two forms of attention, biological and synthetic, on shared platforms leads to an asymmetry of power. Human users operate with finite cognitive resources shaped by evolutionary constraints, while AI systems leverage massive computational power to test, track, and refine attention-grabbing strategies at scale. This imbalance results in platforms that adapt more rapidly to human behavior than users can adapt to them, creating environments that shape cognition as much as they respond to it.

Furthermore, the study highlights that attention is never purely neutral or mechanical. It is normatively structured by what the platform deems valuable. By delegating attention control to algorithms trained on engagement metrics, societies risk outsourcing critical cognitive and cultural functions, such as memory, desire, and judgment, to machine systems governed by opaque economic incentives.

What are the sociopolitical consequences of AI-engineered attention?

The final section of the research addresses the far-reaching implications of AI-mediated attention for democracy, agency, and social cohesion. The authors warn that algorithmically curated attention flows do not just reflect user preferences; they actively shape the informational environment in which opinions, identities, and political commitments are formed. In this way, platforms act not only as intermediaries but as cognitive architects, determining what is seen, ignored, or believed.

This manipulation raises serious ethical and political concerns. When engagement metrics dictate content visibility, polarizing, emotionally charged, or misleading content often rises to the top. The design logic of attention-maximizing platforms can thereby fragment public discourse, entrench ideological echo chambers, and erode trust in democratic institutions.

Additionally, the commodification of attention has developmental consequences, particularly for younger users. The authors caution that cognitive and emotional self-regulation is compromised when children and adolescents are immersed in environments that reward compulsive engagement and discourage reflective thought. This, they argue, amounts to a systemic reshaping of cognitive habits at a population level.

AI systems themselves learn from these interactions, perpetuating feedback loops that solidify existing patterns of behavior. Without regulatory oversight or public accountability, the fusion of machine learning and attention capture could deepen inequalities in informational access, entrench surveillance-based business models, and undermine collective autonomy.

The study urges policymakers, technologists, and citizens to resist the reduction of attention to a monetizable variable and instead foster environments where attentional practices support flourishing, critical thought, and civic engagement.

First published in: Devdiscourse