Aesthetic realism drives public trust in AI deepfakes

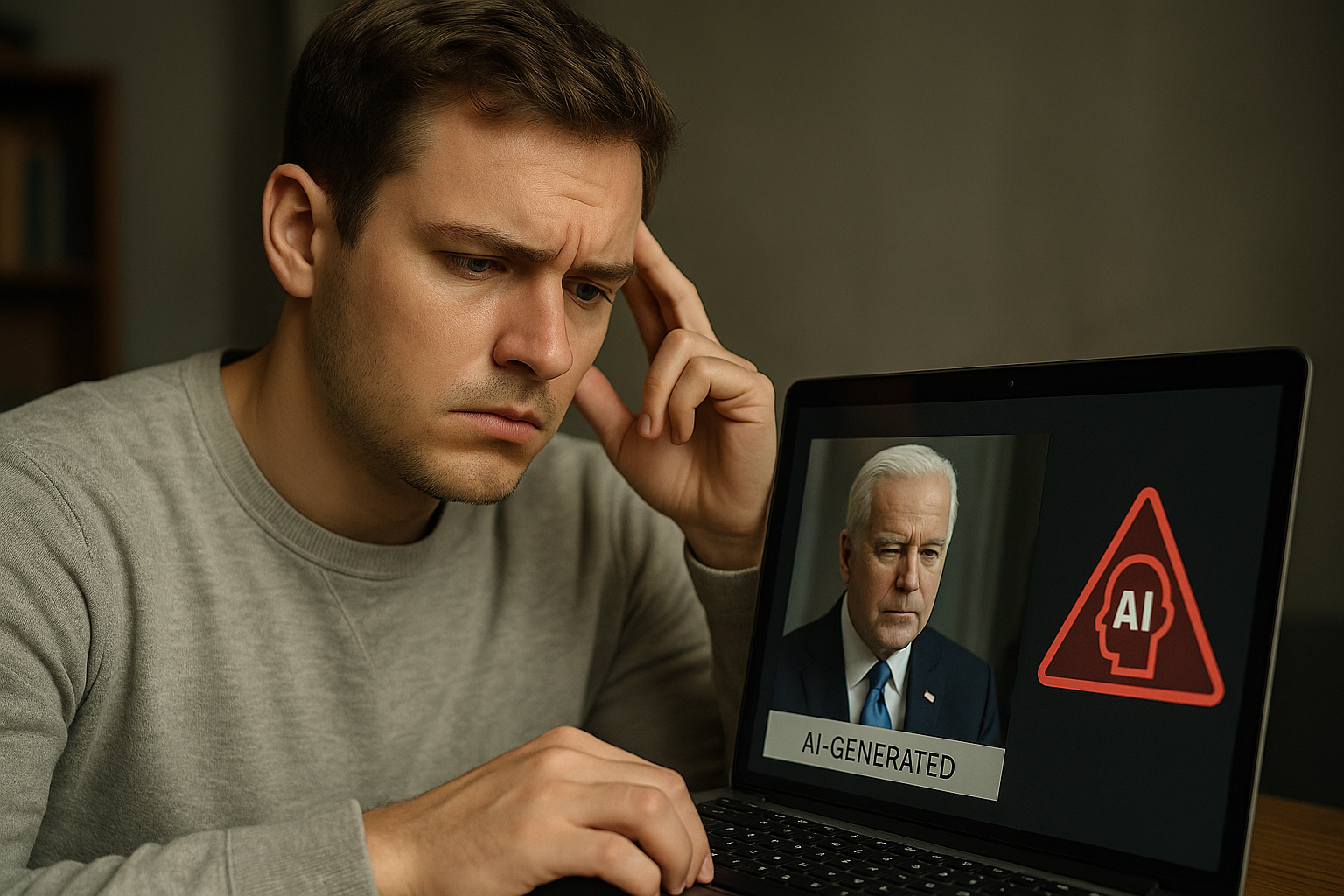

A new study reveals that the hyper-realistic look of AI-generated images, not their emotional intensity, significantly sways public perception of authenticity, even when the content is entirely fabricated. The research, titled "Deciphering authenticity in the age of AI: how AI‑generated disinformation images and AI detection tools influence judgements of authenticity", was published in the journal AI & Society.

The study surveyed 292 UK-based participants and tested how people interpret AI-generated disinformation images, especially under the influence of image realism, emotional tone, and machine-generated authenticity verdicts. The findings expose how aesthetic realism can manipulate public belief, raising urgent questions about society’s readiness to counteract visual deception in the AI age.

Do realistic-looking images fool us more easily?

The study answers a key question: which visual features of AI-generated images most strongly influence perceptions of authenticity? In a controlled experiment, participants were shown a selection of AI-generated and real disinformation images that varied in two characteristics: how realistic they looked (aesthetic realism) and how emotionally charged they appeared (emotional salience). They were asked to judge whether each image was real or AI-generated and to rate their confidence in those judgments.

The data demonstrated that aesthetic realism alone played a statistically significant role in shaping participants’ authenticity judgments. Images with high aesthetic realism were more likely to be judged as real, even when they were entirely synthetic. Interestingly, emotional salience, despite being a hallmark of traditional disinformation strategies, had no measurable impact on whether participants thought the image was real or fake.

Moreover, participants expressed lower confidence in their judgments of more realistic-looking images: the more realistic an AI-generated image appeared, the less certain they felt about their assessment, suggesting a destabilizing effect on visual literacy.

These findings not only confirm the deceptive power of AI-generated visuals but also highlight the limitations of emotional cues in shaping viewer trust in the age of hyper-realistic deepfakes.

Can AI detection tools improve human judgment?

The study also explored whether machine-based AI detection tools could help individuals make more accurate assessments. After making their initial judgment, participants were shown a verdict from an AI detector (Hive Moderation) and were given the option to change their answer.

The results showed that people were more likely to change their initial authenticity judgments in response to the AI detector's verdict if the image was highly realistic or if they lacked confidence in their original judgment. However, emotional salience still had no significant effect on whether they would update their assessment.

These outcomes suggest the emergence of a "machine heuristic": a reliance on machine-generated information as a shortcut for decision-making under uncertainty. Participants who felt uncertain were particularly inclined to defer to the AI tool, highlighting the double-edged nature of such systems. While this reliance may aid in filtering disinformation, it also opens the door to over-dependence and the risk of manipulation through erroneous or biased machine verdicts.

The study also found that positive attitudes toward AI correlated with a greater likelihood of accepting the AI tool’s assessment. Conversely, participants with negative attitudes toward AI exhibited higher confidence in their original judgments and were less likely to revise them, revealing a complex dynamic between trust, skepticism, and reliance on automation.

What are the broader implications for misinformation mitigation?

The research sheds light on the growing challenge of distinguishing fact from fabrication in a visual culture increasingly shaped by AI. The finding that aesthetic realism, not emotional manipulation, drives perception of authenticity disrupts conventional assumptions in disinformation studies. It also emphasizes the limitations of cognitive shortcuts, or heuristics, in the modern information environment.

With AI-generated content becoming more advanced and less visually distinguishable from reality, there is an urgent need to improve public literacy on detecting subtle signs of fabrication. However, the study warns against excessive reliance on AI detection tools without public understanding of their limitations. Such tools, if misused or misunderstood, could end up legitimizing disinformation rather than curbing it.

The researchers argue that any future strategies to combat visual disinformation must go beyond surface-level detection. These should include deeper public education on AI manipulation, transparent communication from detection tool developers, and regulatory frameworks like the European Union's AI Act to ensure safety and accountability.

Furthermore, the study calls for future research to explore whether these findings hold in different cultural contexts and with other media types such as AI-generated text, video, and audio. Notably, the current study used still images and focused on a UK sample, two factors that may limit the generalizability of its conclusions across countries and platforms.

- FIRST PUBLISHED IN: Devdiscourse