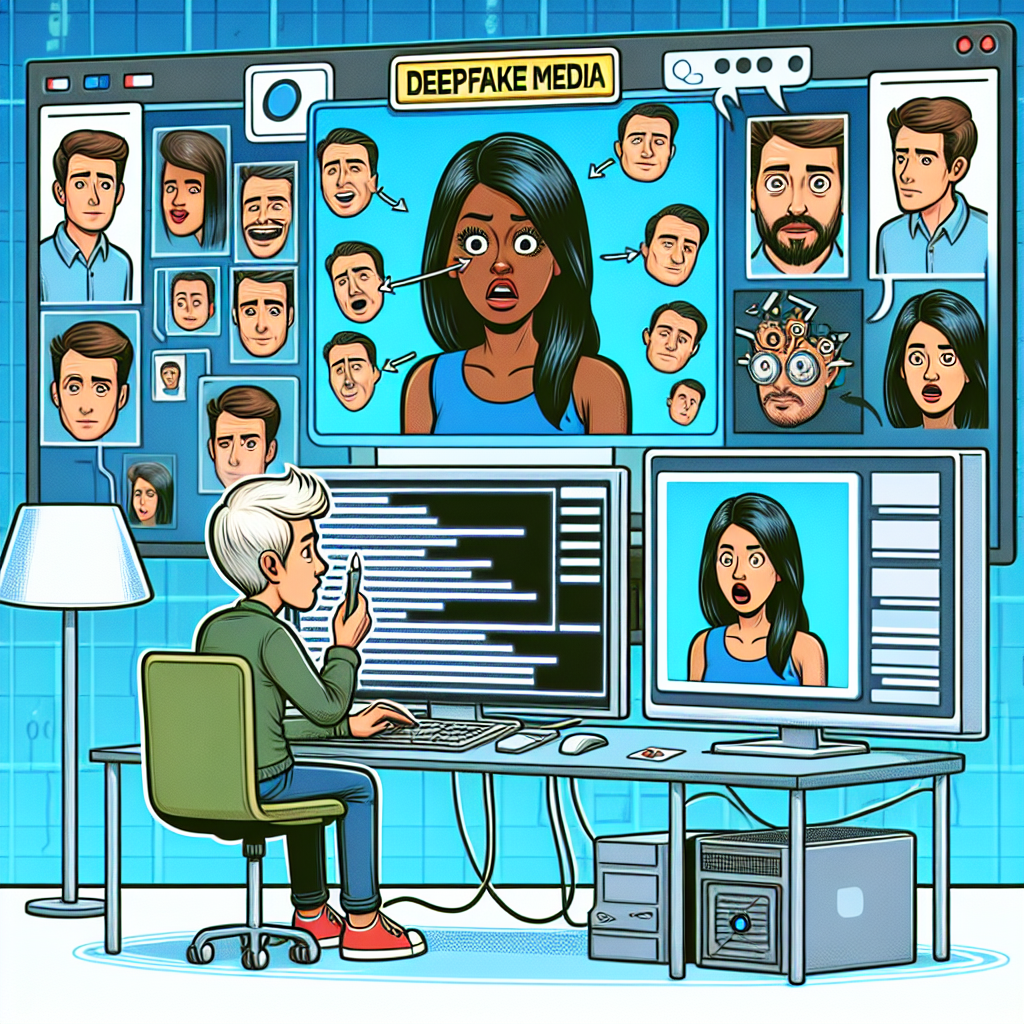

Tackling Deepfake Dilemmas: A Call to Action for Global Standards

The UN's International Telecommunication Union emphasizes the need for advanced tools to combat deepfakes, urging social media platforms to authenticate content. Experts call for global standards and digital verification to restore trust. ITU's AI for Good Summit addressed these challenges, highlighting proactive solutions and public education to counter misinformation.

The United Nations' International Telecommunication Union (ITU) has called for advanced tools to detect and remove misinformation and deepfake content. The appeal aims to counter the escalating threats of election interference and financial fraud, as noted in a recent ITU report unveiled at the 'AI for Good Summit' in Geneva.

Amid growing concerns over the manipulation of multimedia content, the ITU advises content distributors, including social media giants, to use digital verification tools to authenticate images and videos before they are shared. Bilel Jamoussi, a senior figure at the ITU, highlighted the erosion of trust in social media, driven by confusion over which media is genuine and which is fake.

Industry experts including Leonard Rosenthol of Adobe and Dr. Farzaneh Badiei of Digital Medusa emphasized the need for global strategies and standards. They warned against fragmented approaches and stressed the importance of identifying content provenance to maintain trust. In response, the ITU is leading initiatives to develop robust standards, including video watermarking, that embed provenance information in content.
