Advanced AI excels at recognizing emotions, not experiencing them

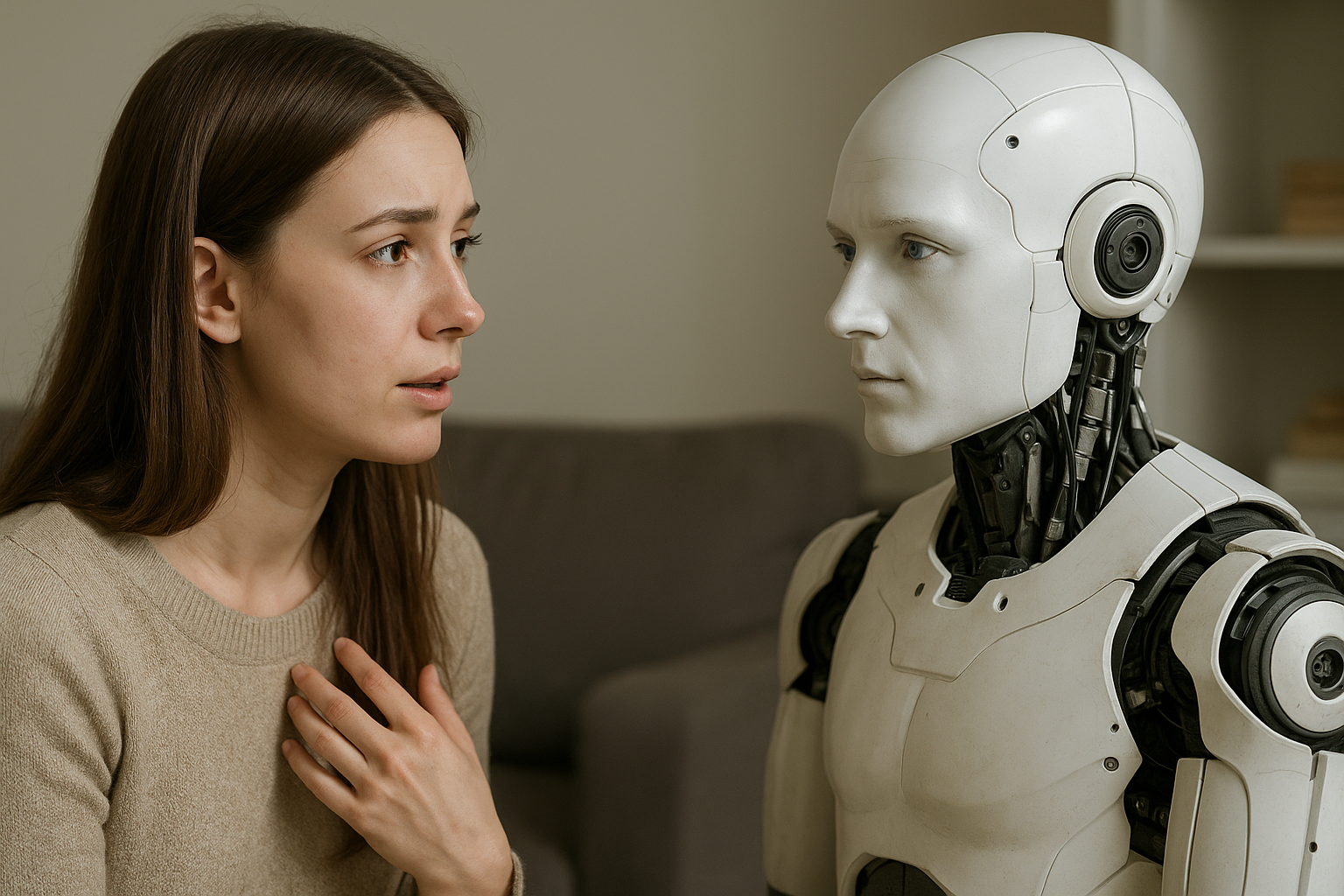

Artificial intelligence systems are now demonstrating cognitive empathy skills that not only rival but exceed those of humans, according to new research. However, their capacity to share and feel emotions, known as affective empathy, remains significantly weaker. The study, submitted to arXiv, offers one of the most comprehensive examinations of empathy in language models to date.

Titled "Heartificial Intelligence: Exploring Empathy in Language Models," the research tested both small (SLMs) and large language models (LLMs) on a series of standardized psychological assessments commonly used to measure empathy in humans. The findings highlight an emerging duality in artificial empathy: an impressive mastery of understanding others’ perspectives, paired with a fundamental inability to authentically share their emotions.

How well can AI understand human thoughts and feelings?

To investigate cognitive empathy, the ability to understand another person’s mental state, the researchers applied rigorous text-based and image-based evaluations. These included the Strange Stories Revised test, which measures interpretation of complex social interactions, and the Situational Test of Emotional Understanding, which assesses recognition of emotions from situational cues. For image-based measurement, the Seeing Emotions in the Eyes test was used to gauge recognition of facial expressions.

Across these tasks, leading LLMs such as GPT-4o, Gemini 1.5 Pro, and Claude 3.5 Sonnet consistently outperformed humans, including groups with formal training in psychology. In text-based scenarios, the highest-scoring models achieved perfect results in interpreting misunderstandings, double bluffs, white lies, and persuasive tactics. Even against the top-performing human cohorts, the models' lead was substantial, reflecting an exceptional ability to process nuanced emotional and social cues.

Image-based tests revealed a more complex picture. While GPT-4o and Gemini 1.5 Pro matched or exceeded human performance in identifying emotions like happiness, sadness, and surprise, all models struggled more with subtle or ambiguous states such as fear. This suggests that while extensive pretraining equips AI with broad recognition abilities, certain nuanced emotional contexts still present challenges.

Can AI experience or share human emotions?

Affective empathy, the capacity to share and emotionally resonate with others’ feelings, was measured using the Toronto Empathy Questionnaire, a self-report instrument widely used in psychological research. Here, the contrast between humans and AI was stark. Even the most advanced LLMs scored far below human participants, whose results reflected the deep emotional resonance shaped by lived experience, cultural context, and neurobiological mechanisms.

The study confirms a core limitation of AI: while models can convincingly simulate the recognition of emotional states, they do not possess genuine feelings. This absence of subjective emotional experience constrains their ability to authentically engage in affective empathy. The authors note that although self-report questionnaires have methodological limitations when applied to AI, the performance gap is too large to attribute solely to measurement issues.

This disparity has practical implications. In real-world interactions, affective empathy is often key to building trust, emotional connection, and therapeutic rapport, areas where AI’s lack of authentic emotional experience may limit its role. However, the same detachment can also be an advantage in contexts where objective, bias-free emotional support is valuable, such as in crisis triage, customer service, or large-scale educational environments.

What does this mean for the future of human-AI collaboration?

The authors argue that the complementary strengths of humans and AI create opportunities for more effective joint problem-solving. In mental health care, for example, AI could deliver consistent, round-the-clock cognitive behavioral therapy support, freeing human therapists to focus on the deeper emotional engagement that patients often require. In education, AI could handle large-scale assessments and provide data-driven feedback, allowing teachers to devote more time to personalized, empathetic student interactions. In conflict resolution, AI could offer impartial analysis and scenario modeling, while human mediators provide the cultural sensitivity and emotional intelligence essential for successful negotiations.

The research suggests that empathy in AI should not be judged solely by its ability to mimic human emotional states. Instead, its value may lie in leveraging cognitive empathy to provide accurate, contextually relevant, and unbiased responses. This approach could redefine how empathy is operationalized in technology, shifting from imitation of human feelings to the creation of supportive, equitable, and scalable systems for human interaction.

The study also highlights the influence of model scale and training on empathy performance. Larger models consistently achieved higher scores in cognitive empathy tasks, suggesting a link between model scale and interpretive skill. Furthermore, targeted fine-tuning on emotion-rich datasets may enhance AI’s ability to recognize and respond to subtler emotional cues, narrowing current performance gaps.

While the findings position LLMs as powerful tools for tasks requiring perspective-taking and nuanced understanding, they also underscore the irreplaceable role of human affective empathy in situations demanding genuine emotional connection. As AI continues to advance, the challenge will be to integrate these systems in ways that amplify human strengths while compensating for technological limitations, a balance that could transform how society addresses complex social, educational, and healthcare needs.

The authors conclude that AI’s inability to feel emotions should be seen not as a flaw but as a defining feature that, when combined with human emotional intelligence, offers a pathway to more inclusive, scalable, and effective empathy-driven solutions. In doing so, they envision a future where human and machine empathy work in tandem, not in competition, to address some of the most pressing interpersonal challenges of our time.

First published in: Devdiscourse