Over 50% of public companies now flag AI risks in SEC filings

A new analysis of corporate filings has revealed that public companies in the United States are increasingly acknowledging the risks posed by artificial intelligence (AI), but many still rely on vague and boilerplate language that obscures critical details.

The research appears in the paper "Are Companies Taking AI Risks Seriously? A Systematic Analysis of Companies’ AI Risk Disclosures in SEC 10-K Forms," scheduled for presentation at the SoGood Workshop of ECML PKDD 2025. The study delivers the first large-scale, systematic review of AI-related risk disclosures, covering more than 30,000 filings submitted over the past five years.

The growing prevalence of AI risk mentions

The study examined annual 10-K filings, which publicly traded companies in the U.S. must submit to the SEC and which include a dedicated section on the significant risks facing their business and operations. Because these filings are legally required and scrutinized by regulators, they have become a key measure of how firms assess and communicate AI-related risks.

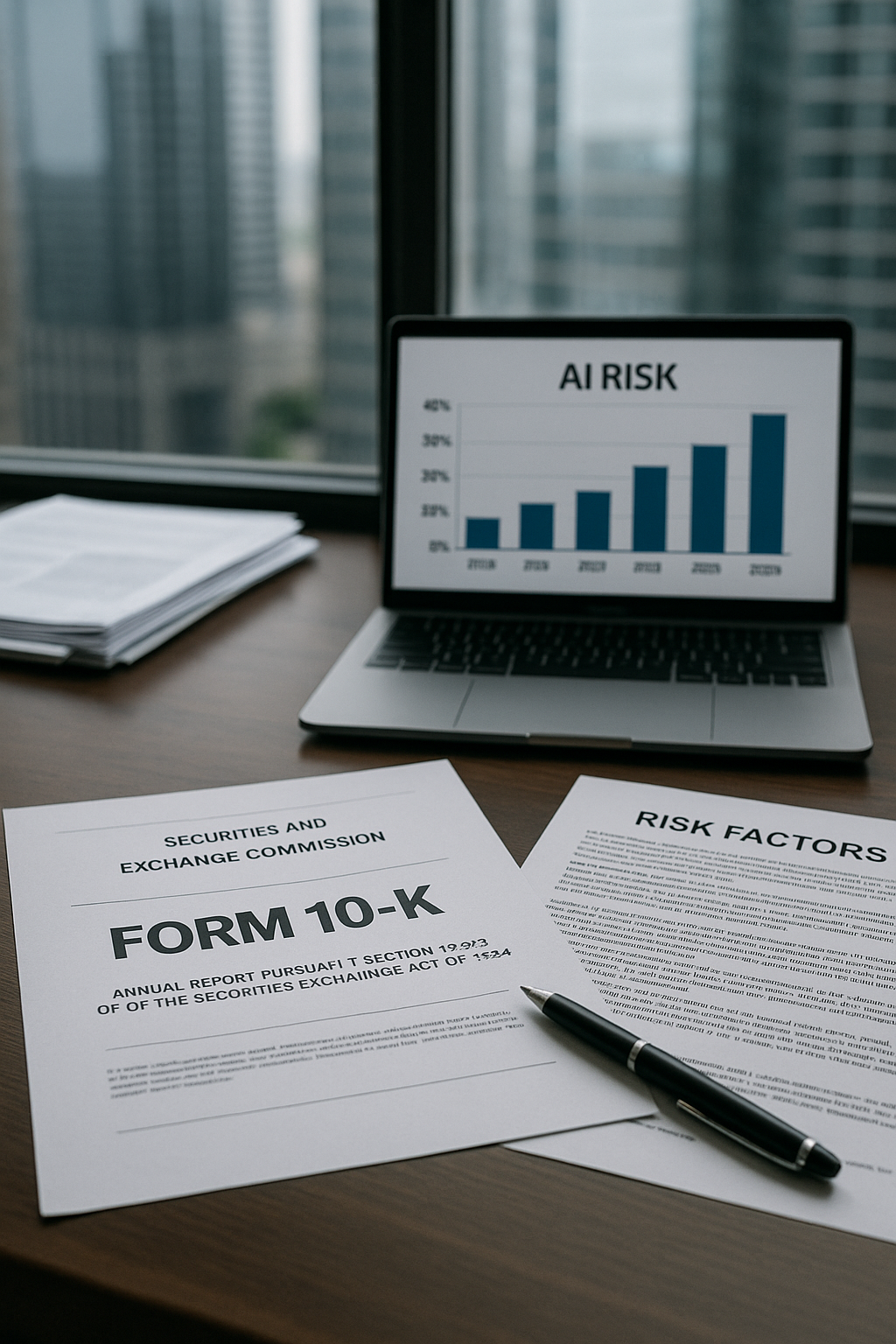

The findings point to a dramatic increase in the visibility of AI within corporate disclosures. In 2020, fewer than 5% of companies made any mention of AI in their filings. By 2024, that number had soared to over 50%, with a particularly sharp rise between 2022 and 2024. This trajectory reflects the rapid integration of AI across industries, fueled by technological advances and heightened regulatory attention.

The research shows that companies are not only integrating AI more deeply into their operations but also becoming increasingly aware of the associated risks. While mentions of AI in the business operations sections of filings doubled during this period, risk disclosures grew at an even faster pace, indicating that firms recognize AI as both a strategic driver and a significant source of potential liability. Notably, 26% of companies in 2024 reported AI-related risks even when they did not reference AI as part of their core operations.

Industry-specific trends add nuance to this overall picture. Technology firms such as Microsoft, Apple, NVIDIA, and Alphabet lead the way in both operational and risk disclosures, with over 75% of companies in the sector reporting AI-related content. Non-tech sectors, including finance, pharmaceuticals, and energy, are also joining this trend, often with filings that focus more on risks than on operational benefits, signaling growing awareness of AI’s disruptive potential even outside traditional tech domains.

What companies are disclosing about AI risks

To understand the content of these disclosures, the researchers conducted a qualitative review of a diverse sample of 50 companies, including top tech firms, randomly selected companies from various industries, and firms that mentioned AI for the first time in 2024, and only in their risk disclosures.

The analysis categorizes AI risks into four main groups: legal, competitive, reputational, and societal.

Legal risks dominate the disclosures, driven by concerns over regulatory uncertainty, compliance costs, and potential liability. Many companies cite the growing complexity of the regulatory environment, particularly in light of the European Union’s AI Act and the SEC’s repeated warnings against misleading AI claims, a practice commonly referred to as “AI washing.”

Competitive risks are also prominent, especially among major technology companies engaged in intense competition for AI leadership. Firms express concern over the high costs of AI research and deployment, the risk of losing market share to faster-moving competitors, and the challenges of sustaining innovation in a rapidly evolving field.

Reputational risks are frequently cited but often in general terms, with companies acknowledging that missteps in AI development or deployment could damage public trust or brand image.

Societal risks, though less frequently detailed, are gaining attention. Companies report threats ranging from AI-facilitated cyberattacks and fraud to privacy and security vulnerabilities, as well as the technical limitations of AI systems, such as bias, lack of transparency, and reliability issues. Interestingly, while tech firms are more likely to discuss issues like misinformation risks, non-tech firms more often acknowledge technical limitations and operational risks associated with AI.

However, the study notes significant omissions. Few companies explicitly address AI’s environmental impact, potential labor market disruptions, or the risks associated with advanced AI capabilities. This gap underscores the uneven depth of current corporate risk disclosures and suggests that many firms are reluctant to engage publicly with the broader societal implications of AI.

Regulatory pressures and the path ahead

The surge in AI disclosures coincides with mounting regulatory and investor scrutiny. The SEC has issued multiple warnings against exaggerated or misleading statements about AI in corporate filings, emphasizing the need for accurate, company-specific information. Recent enforcement actions and shareholder lawsuits have underscored the legal and financial risks of inadequate or misleading AI-related disclosures.

Despite these pressures, the study reveals a persistent reliance on vague or boilerplate language in filings. Companies often describe AI risks in hypothetical terms and externalize responsibility, framing challenges as the result of third-party misuse or external threats rather than of their own operational practices. This pattern mirrors earlier studies of cybersecurity disclosures, in which companies initially downplayed risks until regulatory intervention prompted more detailed reporting.

The researchers argue that the findings support the case for more specific and standardized disclosure requirements for AI, similar to the SEC’s evolving rules on cybersecurity. Clearer guidance could drive greater transparency and accountability, ensuring that investors and stakeholders have a more accurate understanding of the risks posed by AI integration.

The study also points to a need for international comparisons. With the European Union’s AI Act and Digital Services Act introducing stricter requirements for AI risk reporting and governance, future analyses could explore how disclosure practices evolve in response to these regulatory frameworks and whether they drive more detailed and actionable risk communication.

First published in: Devdiscourse