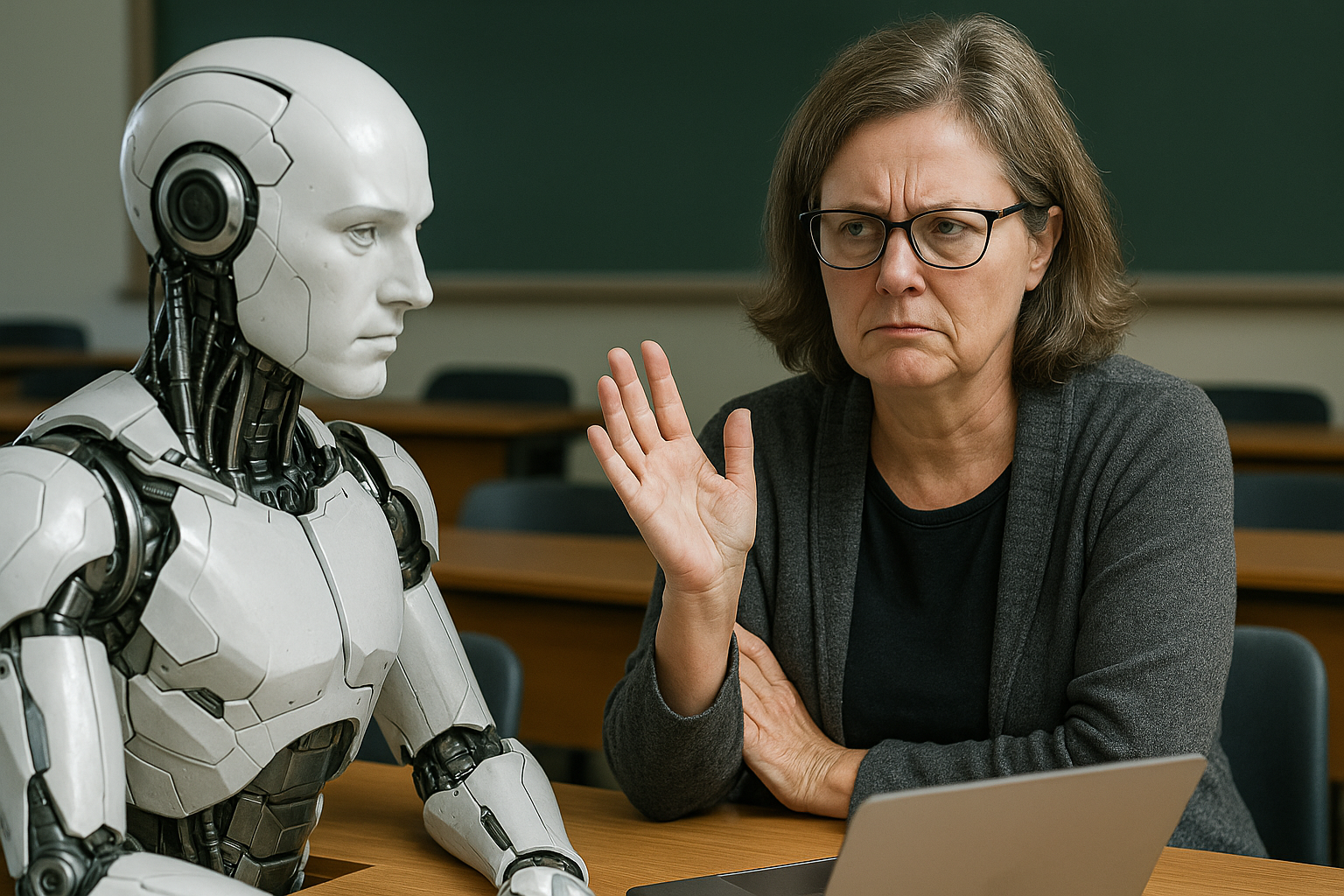

Educators opting out of AI over fears of creativity erosion and academic dishonesty

A growing number of university faculty are rejecting generative AI technologies, not out of ignorance, but due to ethical objections, identity tensions, and skepticism over its academic value, according to a new study published in Frontiers in Communication.

Titled “Opting Out of AI: Exploring Perceptions, Reasons, and Concerns Behind Faculty Resistance to Generative AI”, the study by Aya Shata investigates the motivations behind higher education faculty’s decision not to use GAI tools. Drawing from a survey of 294 faculty members across two U.S. public universities, the research employs Innovation Resistance Theory to uncover the functional and psychological barriers that inhibit adoption.

What prevents faculty from using generative AI?

The study identifies five core themes that define faculty resistance to GAI. The most frequently cited reason was a sense of being "Not Ready, Not Now." Many faculty members admitted lacking the time, information, or familiarity necessary to engage meaningfully with these rapidly evolving technologies. Compounding this is a perception that the landscape of generative AI is too unregulated and ill-defined for effective integration into academic work.

A second barrier, “No Perceived Value,” captured sentiments that GAI does not provide meaningful or discipline-relevant benefits. Faculty in humanities and social sciences, who made up the study's sample, expressed skepticism about whether GAI could enhance their academic or pedagogical processes. Several respondents reported concerns that GAI promotes dependency and undermines critical thinking.

“Identity in Tension” emerged as a particularly profound theme. Faculty who see their scholarly identity as deeply tied to original intellectual labor reported discomfort with delegating any part of that process to artificial intelligence. For these individuals, GAI represents a threat to authenticity, creativity, and academic integrity. Some worried that the use of GAI might damage their credibility with students or colleagues, or reduce their own fulfillment derived from the academic process.

A fourth category, “Threat to Human Intelligence,” reflected concerns about broader intellectual consequences. Faculty voiced fears that overreliance on AI would devalue human ingenuity and lead to an erosion of critical discourse. They argued that creativity, nuance, and ethical reasoning, hallmarks of scholarly work, are not replicable by machines.

Finally, the theme “Future Fears and Present Risks” encapsulated a range of ethical and societal concerns, from labor exploitation in AI training processes to the misuse of copyrighted material and the amplification of misinformation. Non-users were particularly attuned to the possible harms of unchecked AI adoption, describing the technology as a “Pandora’s box” and as “dystopian.”

How do users and non-users differ in their concerns?

The study’s quantitative data revealed that faculty users and non-users of GAI share some concerns, particularly around plagiarism, copyright infringement, and accountability, but diverge significantly in focus. Non-users were more concerned with originality, ethical implications, and the threat to human creativity. Users, meanwhile, were primarily focused on accuracy, reliability, and the risk of biased or “hallucinated” outputs.

A key statistical finding was that non-users reported lower overall comfort with technology, a factor that shaped their behavioral intention to use GAI in the future. General tech comfort emerged as the only significant predictor of a non-user’s likelihood to adopt GAI, outweighing even their stated concerns about the technology itself. This suggests that broader digital literacy may play a decisive role in future adoption patterns across academia.

Interestingly, the concern that GAI might lead to job displacement, often cited in public debates, was not a major issue for faculty in this study. Both users and non-users ranked this among the least pressing worries, possibly because academic roles often involve complex, human-centered responsibilities that current AI tools are not equipped to replicate.

What are the implications for higher education and policy?

The study challenges existing models of technology adoption by showing that faculty resistance is not solely about practicality or ease of use. Instead, it reflects a confluence of ethical, professional, and identity-based considerations. This nuanced resistance cannot be addressed through technical training alone or by highlighting GAI’s benefits.

For institutional leaders and policymakers, these findings carry important implications. Efforts to increase adoption must account for emotional and moral dimensions of faculty resistance. That means going beyond standard compliance protocols and integrating transparent ethical guidelines, participatory policy development, and values-based communication.

Universities could also benefit from creating forums for dialogue around AI’s role in education, rather than issuing top-down directives. These platforms could empower skeptical faculty to express their concerns, contribute to institutional strategy, and feel ownership over AI governance structures.

Training programs should not only focus on functional skills but also help faculty navigate the moral and pedagogical trade-offs associated with GAI. As faculty members are central to shaping student engagement, their buy-in is essential for sustainable AI integration in teaching and research.

Moving forward, the study calls for expanded research across a wider range of institutions, particularly in STEM disciplines and international contexts. It also advocates for longitudinal studies to monitor how faculty attitudes evolve as GAI becomes more embedded in academic culture.

- FIRST PUBLISHED IN: Devdiscourse