AI in the Classroom: Opportunities and Risks for Students with Special Education Needs

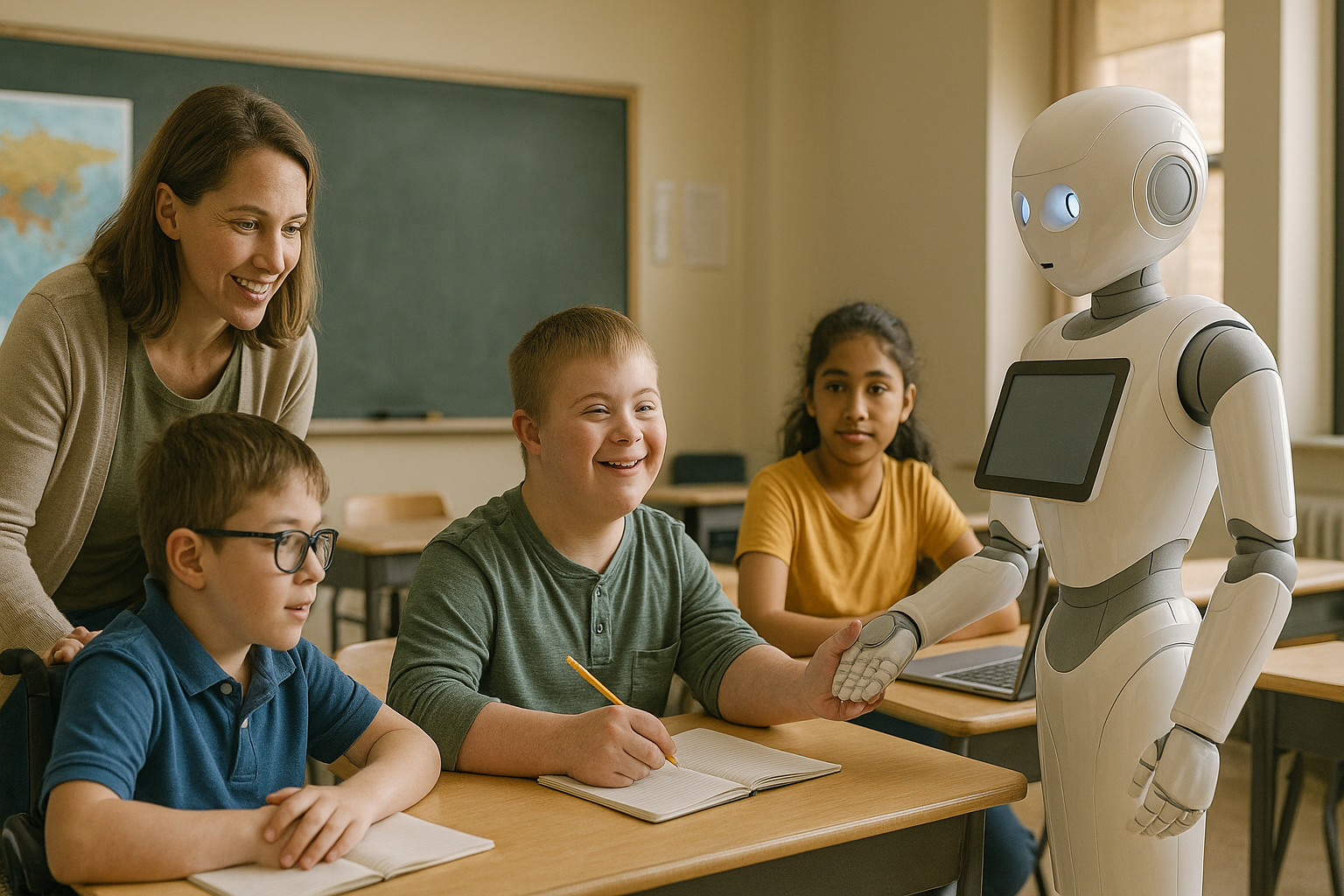

The OECD report argues that artificial intelligence, when ethically designed and carefully governed, can support students with special education needs by providing personalized tools for learning, communication, and accessibility. It warns, however, that without strong safeguards around privacy, bias, cost, and accountability, AI risks deepening the very inequities it is meant to reduce.

The OECD’s latest report, produced with research contributions from the National AI Institute for Exceptional Education, the Learning Engineering Virtual Institute, and European university consortia, makes a persuasive argument that artificial intelligence could be transformative in supporting students with special education needs, provided it is rolled out responsibly. Students with disabilities remain among the most disadvantaged in terms of education and employment across the OECD, with girls and women facing even greater inequities. Although mainstreaming children with disabilities into general classrooms has been shown to improve both academic and social outcomes without harming peers, the stubborn persistence of achievement gaps demands fresh solutions. Against this backdrop, the report asks a crucial question: can AI bridge those divides without worsening risks of bias, privacy breaches, or environmental burdens?

Mapping Needs and Technologies

The report is careful in its definitions. Special education needs cover a wide range of conditions, including dyslexia, dysgraphia, dyscalculia, autism spectrum disorders, ADHD, and physical or sensory impairments. Artificial intelligence is described not as a single technology but as a spectrum of tools, from traditional machine learning to large language models. These technologies are already quietly present in classrooms, acting as tutors, research assistants, or accessibility layers. The authors stress that any responsible application must be rooted in an understanding of both possibilities and limits, urging educators and policymakers to look past hype and focus on evidence.

To illustrate how AI is being applied, the report highlights several tools already in use or under evaluation. Dytective, for instance, uses linguistic data and machine learning to screen for dyslexia and then trains literacy skills through adaptive games. Studies have shown stronger reading gains when children used DytectiveU alongside professional training. BESPECIAL, created by a university consortium, integrates self-questionnaires, clinical data, and virtual reality tasks to predict support needs and suggest tailored strategies, while the Learning Engineering Virtual Institute’s chatbot delivers research-backed advice to parents and teachers, drawing from repositories like the What Works Clearinghouse. Tools such as KOBI also enhance privacy by offering speech recognition entirely offline.

Emerging Solutions for Learning Challenges

Dysgraphia has fewer validated AI solutions, but Dynamilis shows promise. Using a stylus and tablet, it captures fine details of handwriting such as pressure and speed, then prescribes targeted games to improve motor control. Early research reports detection accuracy of over 90 percent. Dyscalculia tools are further ahead, with Calcularis 2.0 personalizing instruction through a Bayesian network of hundreds of math skills. A randomized controlled trial showed greater arithmetic gains compared to regular teaching, with effects persisting months later.

For physical and sensory impairments, AI builds on established assistive technologies. The aiD project uses deep learning to translate sign language into text and vice versa, while UNICEF’s Accessible Digital Textbooks project employs generative AI to produce multiple accessible versions of standard textbooks, which are then reviewed by human experts. Pilots in Jamaica, Paraguay, and East Africa revealed improved participation and motivation among students. Tools like mDREET, which tunes mobile hearing aids to a child’s specific profile, and text-to-speech systems such as ReadSpeaker, also enhance classroom access. Speech impairments are being tackled by the National AI Institute for Exceptional Education, which is testing an AI Screener to detect developmental issues and an Orchestrator to recommend interventions. Voiceitt, another example, learns to recognize atypical speech patterns and enables clear communication across apps, with pilot studies showing encouraging results.

Autism and ADHD are also focal points. Kiwi, a socially assistive robot, uses reinforcement learning to personalize interactions and detect engagement, helping children with autism improve both math skills and social behaviors. The ECHOES project blends an intelligent virtual agent with practitioner support, boosting spontaneous communication among autistic children. For ADHD, augmented reality and biofeedback games are being trialed to strengthen focus and self-regulation, though researchers caution about overreliance on screen-based tools that may add distractions.

Risks, Perceptions, and Safeguards

While enthusiasm for AI is rising among teachers and school leaders, concerns remain widespread. Educators fear inaccurate outputs, overdependence, and inequities in access. Parents worry about privacy, accountability, and the potential misuse of sensitive data. The report lists a wide range of risks: opaque data pipelines, biometric surveillance, underregulated consent for children, algorithmic bias that reinforces stereotypes, techno-ableism that frames disabilities as deficits to “fix,” unpredictable costs, and the heavy environmental footprint of large AI models. The authors look to the health sector for guidance, citing the use of transparent model cards, secure secondary data sharing, and rigorous ethics oversight as practices that education could adapt.

Policy Imperatives for Responsible Use

The report translates the analysis into four clear policy imperatives. First, ethical design and use: fairness audits, transparency about purpose and data, accountability structures, and a reckoning with environmental impacts. Second, robust research and monitoring: comparative studies to test AI against traditional interventions, long-term tracking of outcomes, and open sharing of results. Third, stronger protections around data: secure sharing methods, privacy-preserving techniques, and active involvement of students with special education needs and their families in governance. Fourth, common accountability frameworks and certification systems, akin to “nutrition labels,” that disclose intended uses, required training, and evidence of effectiveness.

The closing note is both pragmatic and optimistic. With well-designed guardrails, meaningful involvement of students and teachers, and investment in evidence-driven tools, artificial intelligence could genuinely enhance inclusive education. Without such measures, however, it risks compounding the very inequities it is meant to reduce. The message is clear: technology alone cannot create fairness, but with foresight and responsibility, it can be a powerful ally in building more equitable classrooms for the future.

First published in: Devdiscourse
