Student engagement rises with generative AI, but risks of overreliance loom

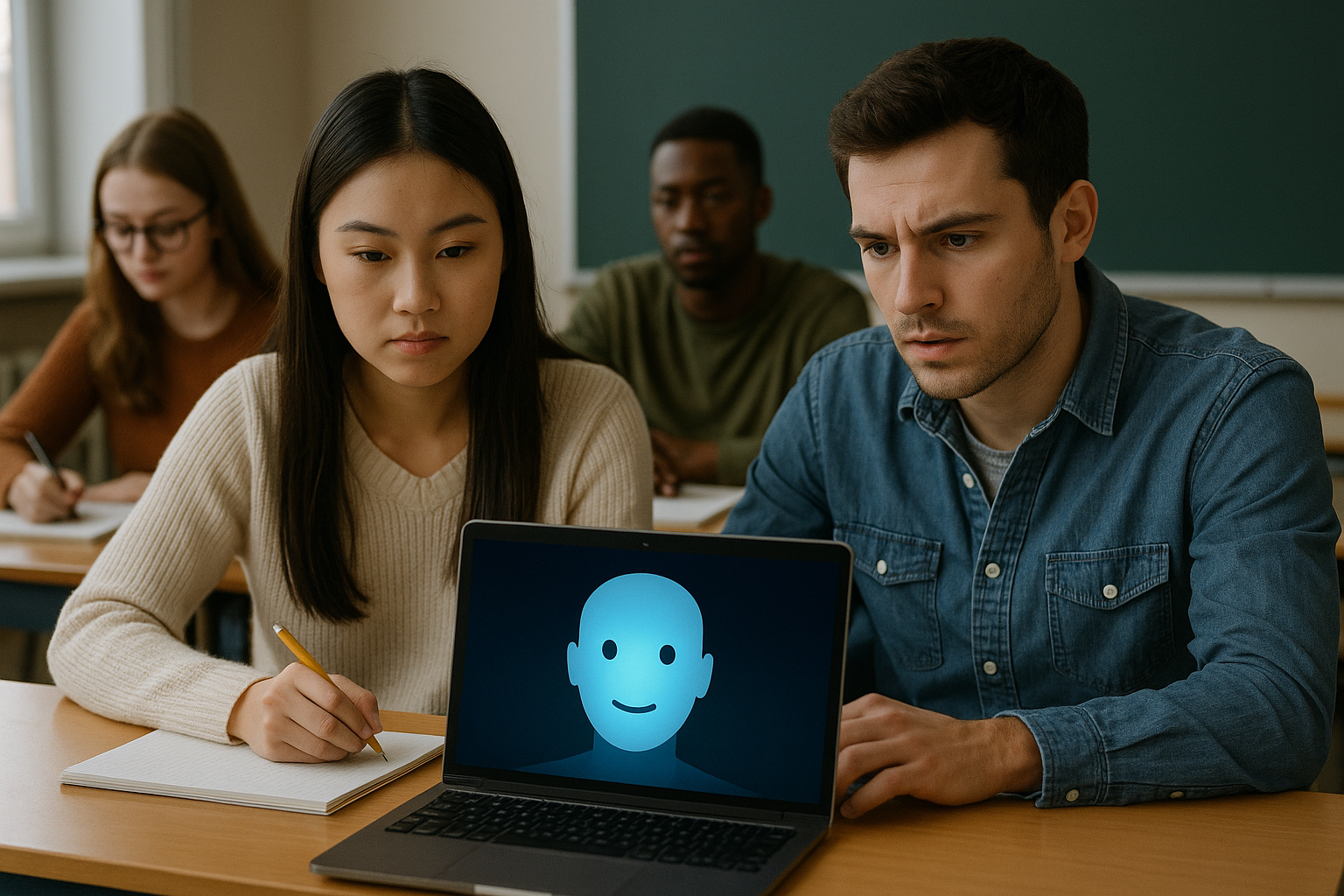

The integration of generative AI (GenAI) into higher education is reshaping the way students learn, interact, and stay engaged with academic content. A new large-scale study published in Behavioral Sciences uncovers the nuanced role GenAI plays in influencing student engagement and provides crucial evidence on both the benefits and risks of AI-assisted learning.

Based on data from more than 72,000 undergraduate students across 25 universities in China, the study, titled “Whether and When Could Generative AI Improve College Student Learning Engagement?”, suggests that while GenAI can enhance cognitive and emotional engagement, its effects on behavioral participation are mixed. This raises critical questions for educators and policymakers seeking to balance innovation with meaningful learning outcomes.

How is generative AI changing student learning behaviors?

The study reveals a widespread adoption of GenAI among undergraduates, with more than 64% reporting its use during the 2023–2024 academic year. Academic tasks such as information retrieval, essay drafting, and problem-solving topped the list of applications, surpassing recreational or daily uses. Students found AI tools especially helpful in managing workloads, clarifying complex concepts, and boosting confidence in tackling difficult assignments.

However, the findings also point to a downside. While cognitive engagement and emotional resilience improved, active behavioral participation, such as class discussion, collaboration, and self-directed learning, showed signs of decline in some contexts. This suggests that students may be relying too heavily on AI tools, reducing their willingness to actively engage with peers and instructors.

The research underscores that AI is not inherently good or bad; its impact depends on how and why students use it. When used as a supplement to human instruction, GenAI can empower students to reach higher levels of academic performance. But when it replaces active participation, the technology risks diminishing essential learning behaviors.

Under what conditions does AI enhance engagement?

GenAI’s positive effects were strongest in courses where learning environments were both high in challenge and high in support. In these settings, AI served as an additional resource, enabling students to overcome academic hurdles without reducing their involvement. The structured guidance of instructors and the demanding nature of coursework created conditions where students could leverage AI effectively.

On the other hand, courses characterized by low challenge but high support were more prone to negative outcomes. Here, the ease of AI use appeared to encourage passivity, reducing motivation and fostering a dependence on automated solutions. This finding is particularly relevant as universities consider integrating AI tools into curriculum design. Without careful planning, the technology could inadvertently widen engagement gaps rather than close them.

The study also indicates that GenAI benefits differ across students. Those with strong self-regulation skills and high motivation levels derived more value from AI, while students who lacked these attributes were more likely to disengage. This suggests that AI adoption strategies must be tailored to diverse student needs rather than applied uniformly.

What does this mean for the future of higher education?

The findings raise broader questions about the evolving role of technology in higher education. The authors point out that GenAI should not be viewed as a replacement for traditional teaching methods but as a strategic tool to enhance learning experiences. For institutions, this means investing in faculty training, developing AI-integrated instructional designs, and creating policies that encourage responsible use.

From a development perspective, the study aligns with the growing movement toward personalized and technology-enhanced learning. However, it also cautions against a one-size-fits-all approach. Overreliance on GenAI could exacerbate disparities, particularly for students lacking digital literacy or self-directed learning skills.

Moreover, the findings call for clear ethical guidelines regarding AI in education. Concerns about plagiarism, academic integrity, and the authenticity of learning outcomes must be addressed as AI tools become more deeply embedded in academic environments. Universities will need to establish standards that not only regulate AI use but also promote its role as an enhancer of, rather than a substitute for, human learning.

- FIRST PUBLISHED IN: Devdiscourse