Explainable AI bridges trust gap between clinicians and algorithms

The rapid expansion of artificial intelligence in healthcare is transforming diagnostics, risk assessment, and treatment planning across medical disciplines. Yet, as these systems gain traction, questions about their transparency and usability remain pressing. In a new meta-analysis, researchers explore these issues in depth.

Their study, “Explainable AI in Clinical Decision Support Systems: A Meta-Analysis of Methods, Applications, and Usability Challenges,” published in Healthcare, provides a comprehensive examination of the methods, applications, and gaps in the integration of explainable artificial intelligence (XAI) within clinical decision support systems (CDSSs).

How Explainable AI is shaping clinical decision support

The meta-analysis covers 62 peer-reviewed studies published between 2018 and 2025, offering a broad look at how XAI technologies are embedded in clinical workflows. It underscores the central role of explainability in fostering trust between clinicians and AI systems, particularly when algorithms influence high-stakes medical decisions.

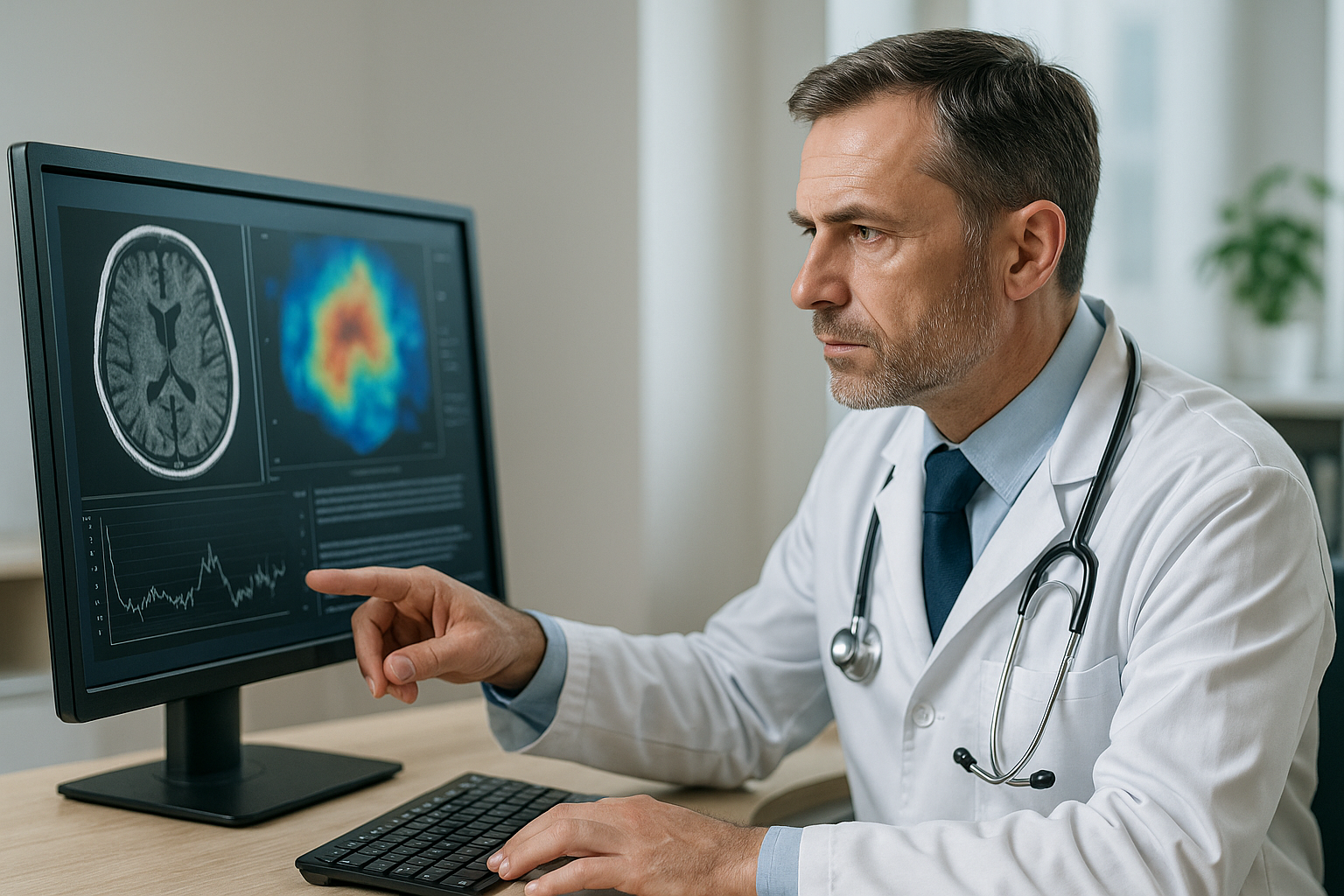

According to the findings, model-agnostic techniques dominate current practice. Methods like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are widely adopted for tasks involving structured clinical data, such as predicting patient outcomes or supporting risk stratification. In imaging-intensive specialties such as radiology, oncology, and neurology, visual explanation tools like Grad-CAM (Gradient-weighted Class Activation Mapping) and attention-based models are frequently deployed to provide interpretable heatmaps and feature highlights that align with clinical reasoning.

According to the study, these methods not only enhance the accuracy and transparency of diagnostic tools but also bring them into closer alignment with clinical workflows. XAI frameworks let clinicians cross-check algorithmic recommendations against their own expertise, so that human judgment and machine intelligence complement each other.
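
To make the idea behind model-agnostic attribution concrete, here is a minimal Python sketch of exact Shapley values, the quantity that SHAP approximates. It is an illustration only and is not taken from the study: the risk model, the feature names (`age`, `lactate`, `on_ventilator`), and the patient and baseline values are all hypothetical. It also uses brute-force enumeration of feature subsets, which is only practical for a handful of features; the SHAP library relies on faster approximations.

```python
from itertools import combinations
from math import factorial


def toy_risk_model(features):
    """Hypothetical risk score, invented for this illustration only."""
    score = 0.01 * features["age"] + 0.1 * features["lactate"]
    # Interaction term: extra risk only when both conditions hold.
    if features["on_ventilator"] and features["lactate"] > 2.0:
        score += 0.2
    return score


def shapley_values(model, patient, baseline):
    """Exact Shapley attribution of model(patient) - model(baseline).

    Features inside a coalition take the patient's value; features
    outside it are replaced by the baseline value.
    """
    names = list(patient)
    n = len(names)

    def value(coalition):
        mixed = {k: (patient[k] if k in coalition else baseline[k]) for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                # Marginal contribution of `name` when added to the coalition.
                total += weight * (value(coalition | {name}) - value(coalition))
        phi[name] = total
    return phi


# Hypothetical patient and reference ("baseline") patient.
patient = {"age": 70, "lactate": 4.0, "on_ventilator": 1}
baseline = {"age": 50, "lactate": 1.0, "on_ventilator": 0}

phi = shapley_values(toy_risk_model, patient, baseline)
for feature, contribution in phi.items():
    print(f"{feature}: {contribution:+.3f}")
```

The attributions always add up to the difference between the patient's score and the baseline score, which is what lets a clinician read them as a per-feature breakdown of one specific prediction. In this toy model the interaction bonus is shared equally between `lactate` and `on_ventilator`, since neither triggers it alone.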

Usability challenges and gaps in adoption

Despite the growing adoption of XAI in CDSSs, the study points to significant usability and validation challenges that limit broader clinical integration. One of the most striking findings is that only a small proportion of studies incorporate clinician feedback or perform usability testing in real-world environments. This gap highlights a disconnect between the development of advanced algorithms and their practical deployment in clinical settings.

Moreover, the research reveals that standardized metrics for evaluating explanation quality are largely absent. Without uniform benchmarks, it is difficult to compare the effectiveness of different XAI techniques or to measure their impact on clinical decision-making. This lack of standardization raises concerns about the reliability and reproducibility of AI-driven recommendations in patient care.

Another critical issue identified is the limited focus on human-in-the-loop trials. While many systems demonstrate strong performance in controlled environments or retrospective datasets, very few have been tested in prospective clinical workflows where human interaction is continuous and dynamic. This shortfall raises questions about the readiness of many AI tools for real-world deployment and underscores the need for participatory design approaches that actively involve clinicians in system development and evaluation.

The study also points to gaps in regulatory alignment and ethical oversight. As health systems accelerate the integration of AI, ensuring compliance with data governance frameworks and patient privacy laws is vital. Without robust governance, the risk of algorithmic bias and unintended harm increases, undermining trust in AI-driven systems.

Pathways for effective integration and trust

To address these gaps, the authors propose several strategies to make XAI-enabled CDSSs both reliable and clinically relevant. Central to their recommendations is the development of standardized evaluation frameworks that establish clear criteria for measuring explanation quality, usability, and clinical utility. These frameworks, they argue, are essential for advancing from research prototypes to scalable, regulatory-compliant tools.

The study introduces the Task–Modality–Explanation Alignment (TMEA) framework, a structured approach for selecting the most appropriate XAI method based on the type of clinical task, data modality, and desired level of interpretability. This framework is designed to streamline the integration of XAI systems into clinical practice by ensuring that the technology aligns with the specific needs and workflows of different medical specialties.
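
The article does not spell out the contents of the TMEA framework, so the following Python sketch only illustrates the general idea of matching an explanation method to a task, a data modality, and a desired explanation scope. The catalog pairs methods with modalities as described earlier in this article (SHAP and LIME for structured data, Grad-CAM and attention maps for imaging). The `local`/`global` scope labels, the class and function names, and the lookup logic are assumptions made for this example, not the authors' specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class XaiMethod:
    name: str
    modality: str       # "structured" or "imaging" (labels assumed here)
    scopes: frozenset   # explanation scopes the method can serve (assumed)


# Hypothetical catalog. Modality pairings follow the article's text;
# the scope labels are an assumption added for illustration.
CATALOG = (
    XaiMethod("SHAP", "structured", frozenset({"local", "global"})),
    XaiMethod("LIME", "structured", frozenset({"local"})),
    XaiMethod("Grad-CAM", "imaging", frozenset({"local"})),
    XaiMethod("attention maps", "imaging", frozenset({"local"})),
)


def suggest_methods(task, modality, scope):
    """Return candidate methods for a use case.

    The task is carried through as context only; filtering uses the
    modality and the requested explanation scope.
    """
    candidates = [
        m.name for m in CATALOG
        if m.modality == modality and scope in m.scopes
    ]
    return {"task": task, "modality": modality, "scope": scope,
            "candidates": candidates}


rec = suggest_methods("risk stratification", "structured", "global")
print(rec["candidates"])  # prints ['SHAP']
```

A real selection aid would need many more dimensions (clinical specialty, regulatory constraints, clinician preferences), but even a small table like this makes the selection criteria explicit and reviewable.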

In addition, the authors call for a greater emphasis on participatory design and human-centered approaches. Involving clinicians, data scientists, and ethicists in the development process can help identify usability bottlenecks early and ensure that the systems are intuitive and aligned with clinical reasoning. This collaborative approach also enhances trust and promotes the adoption of AI tools in daily practice.

Longitudinal validation studies are another priority. The research emphasizes that real-world testing, involving continuous feedback loops and performance monitoring, is key to refining algorithms and ensuring that they remain robust in dynamic healthcare environments. Such studies can also shed light on the long-term impact of XAI on patient outcomes, workflow efficiency, and clinical decision-making.

First published in: Devdiscourse