New framework redefines how AI augments or replaces human roles

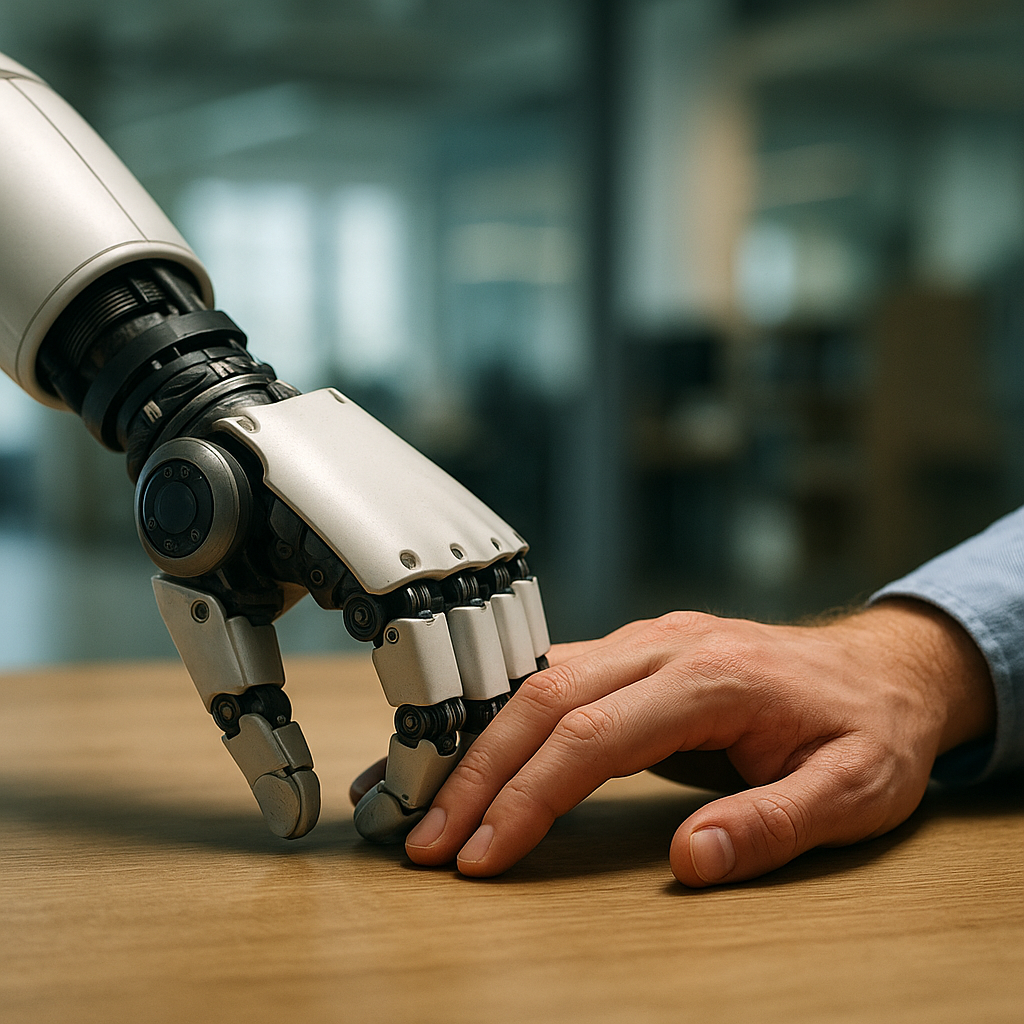

A team of researchers has proposed a new way to evaluate how autonomous intelligent systems affect human roles, offering a path to more transparent and responsible AI adoption. The study, titled “fRAme: An Evaluation Framework for Human Augmentation or Replacement by Autonomous Intelligent Systems” and published in AI & Society, targets a long-standing debate in AI development: whether AI-driven technologies support and enhance human abilities or displace them.

By introducing a structured framework for examining both the technology and the human skills involved in a given task, the authors aim to provide a practical tool for designers, policymakers, and industry leaders who face critical decisions about AI deployment.

Defining the line between augmentation and replacement

The first key issue the paper addresses is the lack of a clear definition for augmentation versus replacement. Public discussions often cast AI in opposing terms, either as a partner that helps people do more or as a competitor that makes them redundant. In practice, however, the impact varies across domains and stakeholders, and outcomes are rarely one-dimensional.

The authors highlight that this ambiguity hinders policy-making and slows responsible adoption of AI systems. They argue that without a shared conceptual and methodological approach, it is impossible to assess whether a system benefits or harms the workforce and society.

The fRAme approach grounds the evaluation in the theory of dispositions, which allows both human abilities and technological capacities to be described in comparable terms. By focusing on specific tasks and contexts, the framework moves away from broad generalizations and encourages case-by-case analysis.

A structured approach to AI evaluation

The second major issue explored in the study is how to operationalize the assessment of AI’s impact. The fRAme framework organizes the evaluation around four core elements:

- The context (Γ) where the system operates.

- The abilities of the technology in question.

- The abilities of the stakeholder or human agent affected.

- The task that links them.

This shared language is intended to make assessments transparent and reproducible across diverse scenarios.

To help analysts describe the abilities of an autonomous system, the authors incorporate an adapted version of the LENS evaluation tool. This tool supports mapping of abilities and sub-abilities in a way that aligns with the fRAme framework, allowing evaluators to judge more precisely whether a system enhances, complements, or replaces human capabilities.

The paper also demonstrates how the same AI system can have different effects on various stakeholders in the same context. For example, a single automated platform might augment certain roles by easing routine tasks while replacing others that rely on the same skill set. This underscores the need for a nuanced approach to evaluation.
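To make the idea concrete, the four elements and the stakeholder-dependent outcome can be sketched in a few lines of Python. This is an illustration only: the `Assessment` class, the `classify` function, the set-overlap rule, and the example abilities are all assumptions made for this sketch and are not taken from the paper, which grounds its evaluation in the theory of dispositions and an adapted LENS tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    """One evaluation: a technology and a stakeholder linked by a task in a context."""
    context: str                      # where the system operates
    task: str                         # the task that links technology and stakeholder
    technology_abilities: frozenset   # abilities the system brings to the task
    stakeholder_abilities: frozenset  # abilities the stakeholder brings to the task


def classify(a: Assessment) -> str:
    """Toy rule (an assumption, not the paper's method).

    If the system covers every ability the stakeholder contributes, call it
    replacement; if it covers only some of them, call it augmentation.
    """
    overlap = a.technology_abilities & a.stakeholder_abilities
    if not overlap:
        return "no direct effect"
    if a.stakeholder_abilities <= a.technology_abilities:
        return "replacement"
    return "augmentation"


# Hypothetical platform and roles, invented for illustration.
platform = frozenset({"data entry", "scheduling"})

clerk = Assessment("back office", "record keeping",
                   platform, frozenset({"data entry"}))
manager = Assessment("back office", "team planning",
                     platform, frozenset({"scheduling", "negotiation"}))

print(classify(clerk))    # replacement
print(classify(manager))  # augmentation
```

The same hypothetical platform yields different labels for the two roles, which mirrors the article's point that a verdict only makes sense for a specific stakeholder, task, and context.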

Implications for policy and responsible AI

The third major issue the authors address is the practical use of such evaluations for shaping policies and industry standards. They point out that previous studies often treat replacement as inherently negative and augmentation as inherently positive, whereas the real impact depends on the specific stakeholder, task, and context.

By applying fRAme, decision-makers can gain a clearer understanding of these dynamics and design interventions, whether regulatory, organizational, or educational, that address actual needs and risks.

The framework’s emphasis on task-specific and stakeholder-specific analysis provides a basis for dialogue among developers, employers, workers, and regulators. It moves the debate from abstract arguments to concrete evidence of how technology changes abilities in practice.

The authors argue that this structured approach can help guide investments in AI development, ensuring that innovation supports human-centered goals and avoids unintended harm.

First published in: Devdiscourse