Explainable AI bridges accuracy and accountability in combating deepfakes

A new study highlights the urgent need for transparent, explainable artificial intelligence to combat the growing threat of deepfakes, which are increasingly undermining trust in digital content. The paper, published in Cryptography, provides the first comprehensive review of explainable AI (XAI) techniques designed to improve the reliability and accountability of deepfake detection in high-stakes domains such as forensics, journalism, and online content moderation.

The authors warn that while current deepfake detectors have made impressive strides in accuracy, their black-box nature leaves them unfit for use in scenarios requiring verifiable evidence.

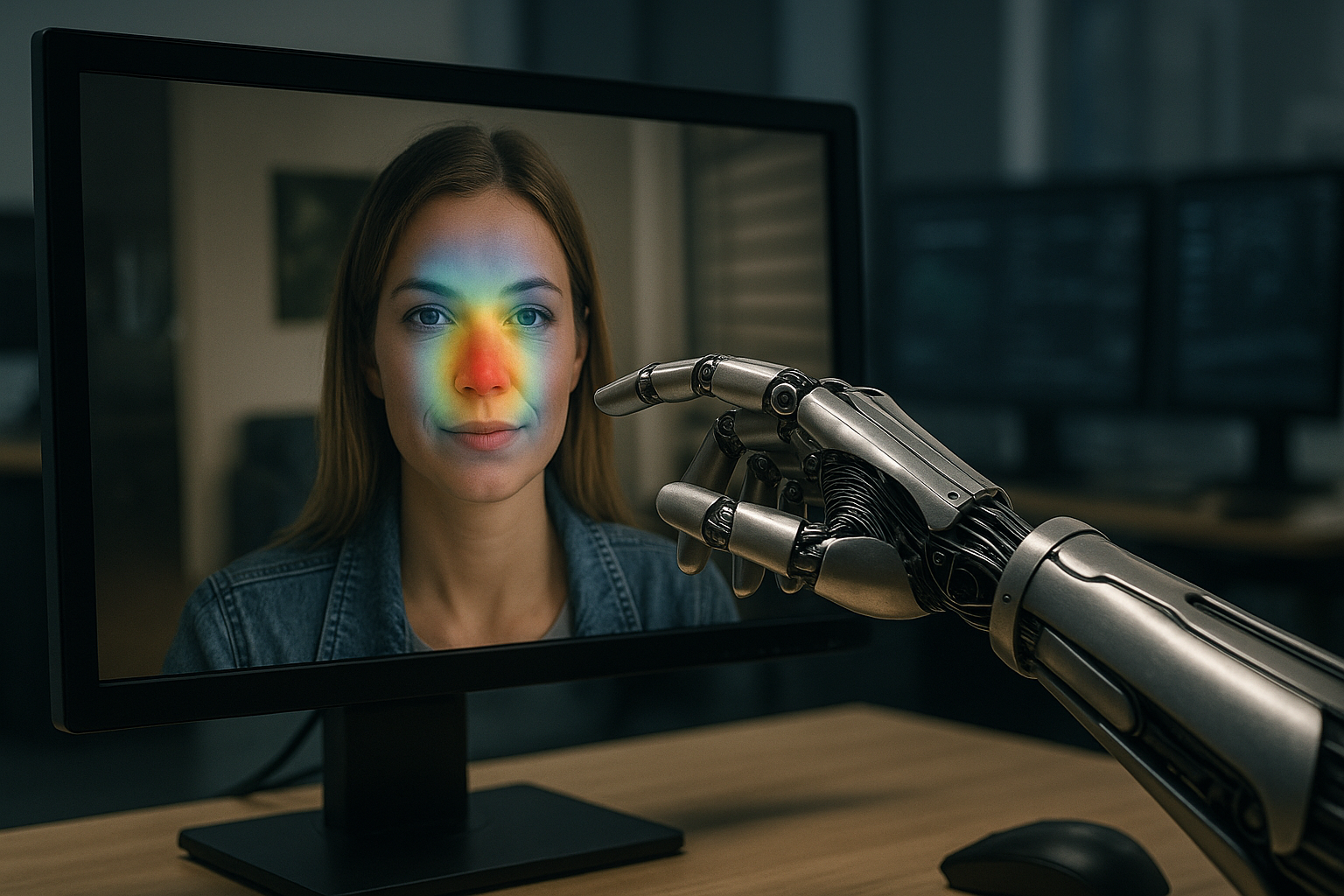

Titled “From Black Boxes to Glass Boxes: Explainable AI for Trustworthy Deepfake Forensics,” the review highlights a paradigm shift toward explainable, or “glass-box,” models that provide interpretable insights into how detection systems reach their decisions, bridging the gap between technical performance and societal trust.

Why explainability matters in deepfake detection

The study primarily addresses the growing gap between what existing deepfake detection models can do and what real-world deployment requires. According to the authors, conventional detectors, often based on complex deep learning architectures, focus heavily on maximizing accuracy on benchmark datasets. However, they fail to show the reasoning behind their conclusions.

This lack of transparency makes it difficult for investigators, journalists, and policymakers to accept the outputs of these models as credible evidence. In legal or journalistic settings, stakeholders need more than a label indicating whether a video is fake or real; they need to understand why the system reached that conclusion.

The paper points out that without explainability, even high-performing models can be easily dismissed or misused, creating openings for misinformation to thrive. The authors argue that explainability not only strengthens accountability but also enhances the resilience of detection systems against adversarial attacks and evolving deepfake techniques.

Advances in forensic and model-centric explainability

The study also explores the current state of explainable AI approaches for deepfake detection. The authors classify existing techniques into three broad categories.

The first category, forensic analysis-based methods, leverages physical and algorithmic traces left during manipulation. Techniques such as photo-response non-uniformity (PRNU) sensor noise detection, residual learning, and convolutional trace analysis identify subtle inconsistencies in images or videos. These methods provide interpretable evidence by pinpointing manipulated regions, making them valuable for forensic investigations.
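
To make the idea of a forensic trace concrete, here is a minimal sketch of noise-residual analysis in the spirit of PRNU. It is an illustration under stated assumptions, not the method of any paper in the review: the 3x3 box blur stands in for the stronger denoisers used in practice, and the function names `box_blur`, `noise_residual`, and `normalized_correlation` are hypothetical.

```python
from math import sqrt

def box_blur(img):
    """3x3 mean filter with edge replication (assumed stand-in for a real denoiser)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp to image border
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            out[y][x] = total / 9.0
    return out

def noise_residual(img):
    """Residual = image minus its denoised version; sensor noise lives here."""
    blurred = box_blur(img)
    return [[img[y][x] - blurred[y][x] for x in range(len(img[0]))]
            for y in range(len(img))]

def normalized_correlation(a, b):
    """Pearson-style correlation between two equally sized 2D arrays."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    num = sum((x - ma) * (y - mb) for x, y in zip(fa, fb))
    den = sqrt(sum((x - ma) ** 2 for x in fa) * sum((y - mb) ** 2 for y in fb))
    return num / den if den else 0.0
```

In a workflow of this kind, the residual of a questioned image would be correlated, region by region, against a reference noise pattern for the claimed camera. Regions with unusually low correlation are candidates for manipulation, which is what makes the evidence easy to point at and explain.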

The second category, model-centric methods, seeks to open up the decision-making process of deep learning models themselves. Tools like Gradient-weighted Class Activation Mapping (Grad-CAM) and SHAP visualizations highlight which regions or features influenced the model’s decision. Attention-based architectures and prototype-driven models, such as DPNet and PUDD, provide further interpretability by showing exemplar patterns that the model associates with authentic or manipulated content.
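
The core Grad-CAM computation is short enough to sketch directly. The example below assumes the feature maps of a convolutional layer and the gradients of the "fake" score with respect to those maps have already been extracted from a model; in a real system they would come from a deep learning framework. The function name `grad_cam` and the toy inputs are illustrative only.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from K feature maps and their gradients.

    activations, gradients: lists of K maps, each H x W (nested lists).
    Assumes both were extracted from the same convolutional layer.
    """
    k = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # Channel weight = gradient averaged over all spatial positions.
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # Weighted sum of feature maps, then ReLU to keep positive evidence only.
    cam = [[max(0.0, sum(weights[c] * activations[c][y][x] for c in range(k)))
            for x in range(w)] for y in range(h)]
    # Scale to [0, 1] for display; skip if the map is entirely zero.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam

# Toy input: channel 0 supports the decision, channel 1 argues against it.
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = grad_cam(acts, grads)
```

The resulting map is usually upsampled and overlaid on the input frame, so a reviewer can see which facial regions pushed the model toward its verdict.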

The third and emerging category involves multimodal and natural language explanations. Systems like TruthLens, M2F2-Det, SIDA, and LayLens combine forensic evidence with semantic reasoning to generate human-readable textual explanations. By translating technical detection processes into accessible language, these approaches aim to bridge the gap between technical experts and non-specialist stakeholders, such as legal authorities and journalists.

Building trust with datasets, benchmarks, and future directions

The study then examines how to make explainability robust and widely adopted. The authors highlight the importance of specialized datasets that include fine-grained annotations and textual explanations. Collections such as DDL, ExDDV, and DD-VQA provide benchmark resources that allow researchers to evaluate explainability alongside detection accuracy.
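
One simple way to score an explanation against a fine-grained annotation is to compare a detector's heatmap with the annotated manipulation mask. The sketch below uses intersection over union (IoU) for that purpose. This is an assumed, generic metric chosen for illustration; the article does not say which metrics the datasets above use, and the name `heatmap_iou` and the 0.5 threshold are hypothetical choices.

```python
def heatmap_iou(heatmap, mask, threshold=0.5):
    """IoU between a thresholded heatmap and a binary ground-truth mask.

    heatmap: H x W values in [0, 1]; mask: H x W of 0/1 annotations.
    """
    inter = union = 0
    for hrow, mrow in zip(heatmap, mask):
        for hv, mv in zip(hrow, mrow):
            pred = hv >= threshold   # pixel flagged by the explanation
            truth = bool(mv)         # pixel annotated as manipulated
            inter += pred and truth
            union += pred or truth
    # Both empty means the explanation agrees that nothing was manipulated.
    return inter / union if union else 1.0
```

Reporting a localization score like this next to detection accuracy shows whether a model is right for the right reasons, not just right.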

The paper calls for a shift from focusing solely on performance metrics to a more holistic approach that considers interpretability, resilience, and adaptability. The authors advocate for the development of hybrid detection systems that combine low-level forensic evidence with high-level natural language explanations. They also stress the need for causal reasoning frameworks to trace manipulation back to its origins and for continual learning models capable of adapting to new and evolving deepfake generation techniques.

Future progress, as the study envisions, will depend on collaborations between technologists, policymakers, and legal professionals. By creating tools that meet the evidentiary standards of courts and the ethical requirements of public communication, the field can move closer to delivering detection systems that are not just accurate but also trustworthy.

- FIRST PUBLISHED IN:

- Devdiscourse